Introduction to generative AI

Talking about artificial intelligence (AI) biases us toward picturing intelligence as we humans perceive it. The contours of intelligence are ill-defined, but we generally share an understanding that ties it to the ability to perform certain tasks with a degree of skill. The computer analogy implied by the qualifier "artificial" does not help in understanding what AI is or what it can do, because AI does not share the same map of skills.

The term refers to a field of computer science. The technologies it encompasses, often used in combination, enable computers to perform a number of specialized tasks. Compared to a conventional computer program, the main characteristic of AI is its autonomy: it is designed to learn and improve over time, it can reason, and it can also perceive external stimuli and interact with its environment. For these reasons, AI is capable of performing a number of complex tasks that are typically specific to humans and generally associated with our conception of intelligence.

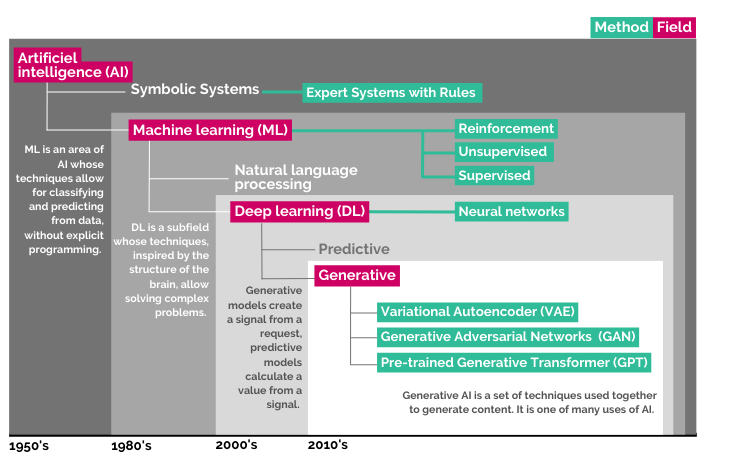

AI is not new. The field encompasses several different technologies, some of which have been around for years or decades.

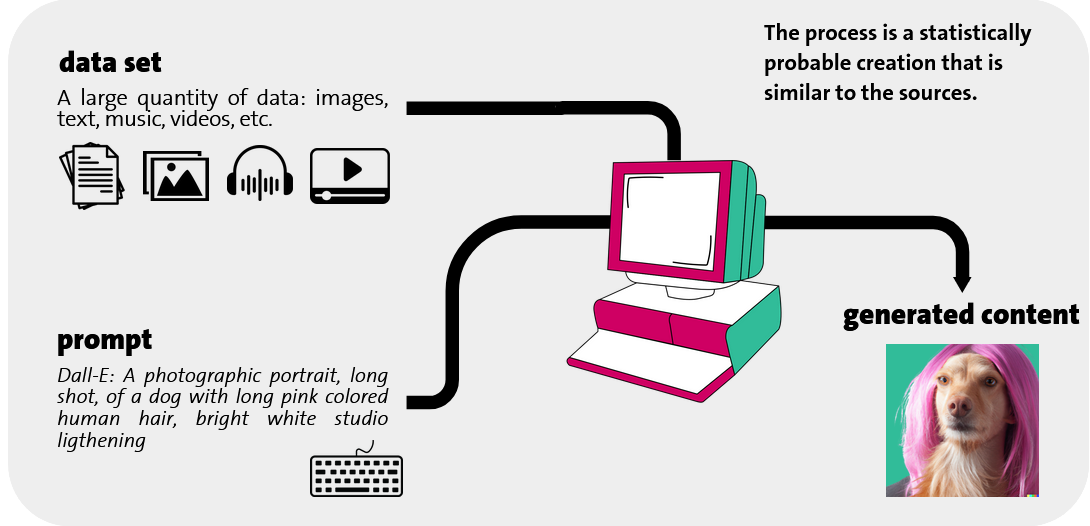

The term generative AI refers to a set of techniques (i.e., algorithms) stemming from the field of artificial intelligence. What is meant by "generative" is the tool's ability to automatically create content from large volumes of existing data on which it is trained. AI does not simply copy and paste what it has analyzed: it imitates, enhances, and creates entirely new content based on a statistical recombination of patterns and structures it identified during its training. This content can include texts, images, music, or computer code.

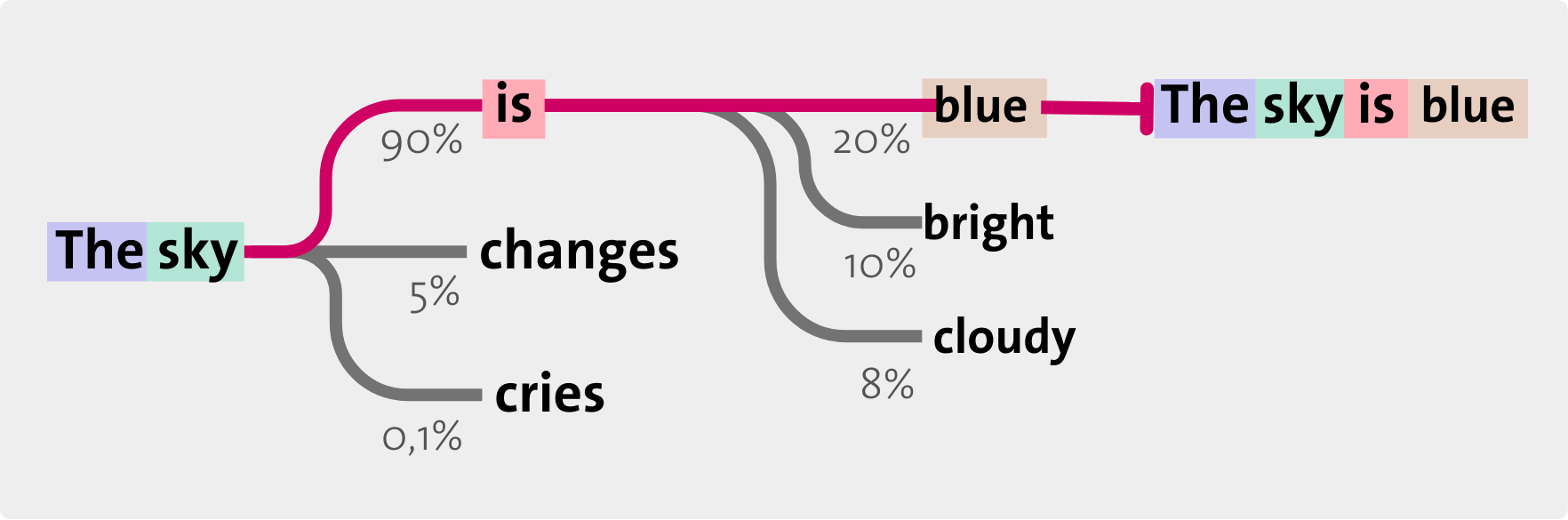

AI learns the rules by analyzing thousands of texts, images or pieces of music (data set). It studies how they are arranged and detects recurring patterns, which it then uses to generate new content based on a query (prompt).

The content generated by AI is statistically recomposed from everything it has learned. It makes sense because it adheres to the grammatical, visual, or musical rules extracted from the training data sets. These rules are memorized in the form of a Large Language Model (LLM, see p. 9). This allows, among other things, the synthesis of information or the proposal of original content.
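The idea of learning recurring patterns from a data set and then statistically recomposing new content from a prompt can be illustrated with a deliberately tiny sketch. It assumes a three-sentence toy corpus and a simple word-pair (bigram) model; the function names `train_bigram_model` and `generate` are hypothetical, and real generative AI tools rely on far larger neural networks rather than on this technique.

```python
import random
from collections import defaultdict


def train_bigram_model(corpus):
    """Record, for each word, every word that followed it in the corpus."""
    followers = defaultdict(list)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            followers[current_word].append(next_word)
    return followers


def generate(followers, prompt_word, max_words=8, seed=0):
    """Extend the prompt word by word, sampling from the observed followers."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    output = [prompt_word]
    for _ in range(max_words - 1):
        candidates = followers.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        # Frequent followers appear more often in the list, so they are
        # proportionally more likely to be chosen.
        output.append(rng.choice(candidates))
    return " ".join(output)


# Toy "data set" (an assumption made for illustration only).
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

The generated sentence may never appear in the corpus, yet every pair of adjacent words in it was observed during training. This is a very reduced picture of content that is new but recomposed from learned patterns.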

Several companies are major players in the field of AI. Their tools, accessible online and often free of charge, are currently the most widely used.

Microsoft Research

Established in 1991 with a focus on computing, the subsidiary invested in AI in the 2010s through various acquisitions and now holds 20% of patents. Innovations are regularly added to Microsoft products (e.g., Bing, HoloLens, Cortana, 365, Azure). In 2016, the Microsoft Research AI division was created, and LinkedIn was acquired to provide data for its models. The 2019 partnership with OpenAI (notably for cloud resources) was crucial for the development of GPT models.

- In 2023, Microsoft released Copilot, an AI assistant that integrates with all its services.

IBM

A pioneer, notably with Deep Blue defeating chess champion Garry Kasparov in 1997. In 2011, the Watson program made headlines by winning the game show Jeopardy!, which required understanding the clues, using the buzzer, and responding verbally. After its knowledge base was extended with internet sources, its access was restricted when the team realized it had picked up a habit of reading Urban Dictionary and regularly used the word "bullshit" in its responses.

- Watson was commercialized from 2012 for healthcare, finance, and research. In 2022, the program was considered a failure and sold to an investment fund.

DeepMind (Google)

Founded in 2010, DeepMind was acquired by Google in 2014 to become its AI subsidiary. Its models draw inspiration from neuroscience to develop learning algorithms. DeepMind focuses on systems capable of playing games: in 2017, AlphaGo was the first program to defeat the world Go champion. Following GPT-3, Google panicked and quickly released its prototype conversational agent.

- The Bard tool was released with the LaMDA model, which was quickly replaced by PaLM 2. Currently, Gemini is the agent being gradually integrated into the Google suite.

Meta AI

Created in 2013, Facebook Artificial Intelligence Research (FAIR) released PyTorch in 2017, an open-source machine learning library (used by companies such as Tesla and Uber). FAIR was renamed Meta AI after Facebook's reorganization and, in 2023, it published its language model Llama. The model was initially accessible to researchers for non-commercial use upon request, before being leaked a month later.

- Llama 2 is a family of open-source language models. Meta does not have publicly accessible tools.

OpenAI

Founded in 2015 as a non-profit organization, OpenAI became a for-profit company in 2019 and established a major partnership with Microsoft. It developed GPT-3, a language model, in 2020 and announced DALL-E for image generation in 2021. In 2022, GPT-3 became accessible online, marking a significant turning point. Thanks to investments from Microsoft, GPT-4 was released in 2023.

- The ChatGPT tool is built upon GPT-4, a powerful model capable of processing text and images, as well as performing real-time internet searches.

A Large Language Model (LLM) is large because it has an enormous number of parameters (on the order of billions), which are the numerical values adjusted during training. It is a model because it is a neural network trained on a large body of text to perform non-specific tasks. It is a language model because it reproduces the syntax and semantics of natural human language by predicting the likely continuation of a given input. This also gives it a form of "general knowledge" based on the training texts.
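To give a feel for what a "number of parameters" means, the sketch below counts the weights and biases of a small fully connected neural network. The layer sizes are hypothetical, and actual LLMs use more elaborate architectures, so this only illustrates how quickly the count grows with the size of the layers.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for inputs, outputs in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * outputs  # one weight per connection
        total += outputs           # one bias per output unit
    return total


# Hypothetical layer sizes, chosen only for illustration.
tiny = count_parameters([10, 20, 5])
wide = count_parameters([1000, 4000, 1000])

print(tiny)  # 325
print(wide)  # 8005000
```

Making each layer roughly a hundred times wider multiplies the parameter count by tens of thousands, which helps explain how models with many large layers reach billions of parameters.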

Generative AI tools rely on immense amounts of data to train the algorithms behind their models. This data can come from various sources:

- It is collected on a massive scale from what is available online.

- Prompts and their responses also constitute data that is used to feed and improve the model.

In response to user requests, these tools generate further data from this initial set. Data is thus the crucial element, the raw material for the functioning and use of generative AI, and its quality is an important part of its value.

While data is of great value to these tools, its use also carries risks.

- The data produced is fallible, which has consequences for the verification of information and for transparency.

- The data entered may be sensitive, which raises issues of security, privacy protection, and intellectual property.

Generative AI gives us the illusion of control. However, the tool remains master of many parameters that we neither understand nor control, and institutionalizing its use is a delicate matter. A recent study (Dell’Acqua et al., 2023) uses the idea of a "jagged" or "irregular" frontier to describe the unpredictable limits of generative AI capabilities. Non-linear and inconsistent, this limit makes the tool ambivalent: it can either improve or hinder the performance of a task. For example, it produces complex texts, such as poems, but struggles to give a list of words starting with the same letter.

The mistake would be to anthropomorphize generative AI as an assistant whose errors and quirks stem from a lack of intelligence or from an atypical profile.

It is an entirely new kind of object. Unlike with individuals, we cannot extrapolate a general level of competence from a few tasks, because it does not rely on a set of correlated or transversal cognitive resources. There is currently no rule for defining its contours.

A particular challenge for UNIGE concerns the rights of individuals, especially regarding personal data. This issue is not addressed by the University's regulations or by a directive, and cannot be, given its complexity. The decision to provide recommendations on this subject is based on the risk of harm to the institution and to individuals if the use of generative AI tools, which are accessible whether approved or not, is left without guidance.